Artificial intelligence has taken off over the last year, presenting opportunities for businesses and other users to benefit from cost savings, the acceleration of key functions, and more. But data indicates that while many companies are also aware of AI’s potential risks, they are not investing in risk management strategies.

Here’s what AI users can do to step up their risk management game.

Embracing AI’s potential

2023 was the year in which businesses began to think seriously about AI, with many testing or evaluating potential applications. In 2024, we’ve seen the proverbial rubber meet the road. Through the first eight months of this year, companies have begun to realize substantial value from AI — especially generative AI.

“After nearly two years of debate, the verdict is in: generative AI (gen AI) is here to stay, and its business potential is massive,” McKinsey declared in a report published earlier this month. “The companies that fail to act and adapt now will likely struggle to catch up in the future.”

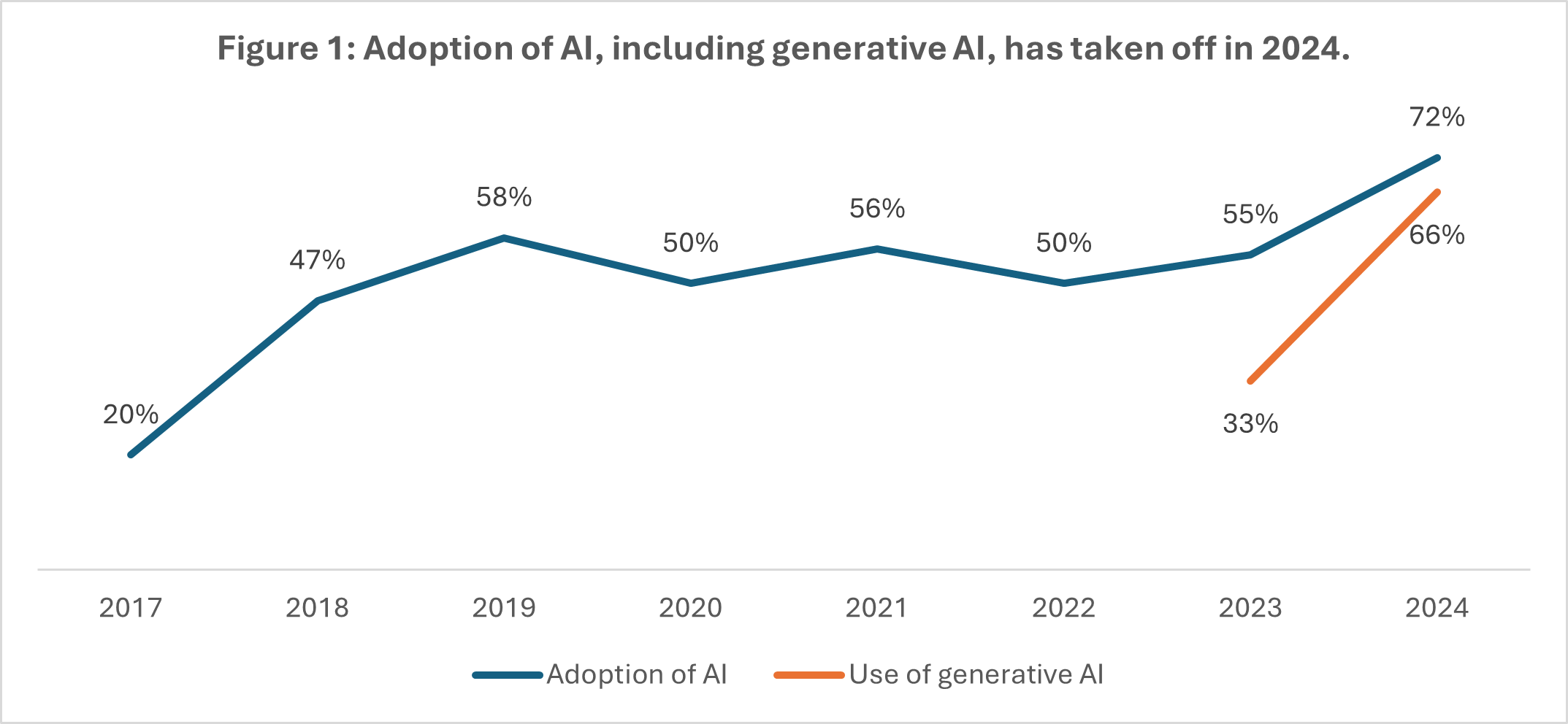

Other McKinsey data highlights how organizations have embraced AI, especially over the last year. Nearly three-quarters (72%) of organizations have now adopted AI in at least one business function, up from 55% in 2023, according to McKinsey (see Figure 1). In 2024, 66% of organizations report using generative AI in at least one business function, double the share of organizations that reported using it in 2023.

Source: McKinsey & Company

What’s more, 67% of respondents surveyed by McKinsey expect their organizations to invest more in AI over the next three years. So, the pressure will be on for businesses to maximize their potential AI opportunities by thinking creatively about how AI can be used to support a range of corporate functions.

The possibilities are seemingly endless. From healthcare and logistics to real estate and private equity, companies across the economy see opportunity in AI. And those opportunities lie in advancing nearly every corporate function, from human resources and finance to supply chain management and information systems.

As organizations take their AI use to the next level, however, the difference between success and failure may lie in whether they can similarly level up their risk management efforts.

Important risks going unaddressed

One sign of organizations’ confidence in AI’s potential is that they are creating new, AI-centric job roles. This is happening at all levels, including within leadership ranks: The number of companies with “Head of AI” positions grew 13% from December 2022 to November 2023, according to LinkedIn.

Many companies are also noting AI’s potential risks. More than half of all Fortune 500 companies (281) cited AI as a risk factor in their most recent 10-K filings with the Securities and Exchange Commission, according to a recent report from Arize AI. But even as companies recognize these risks, many appear not to be taking action to address them.

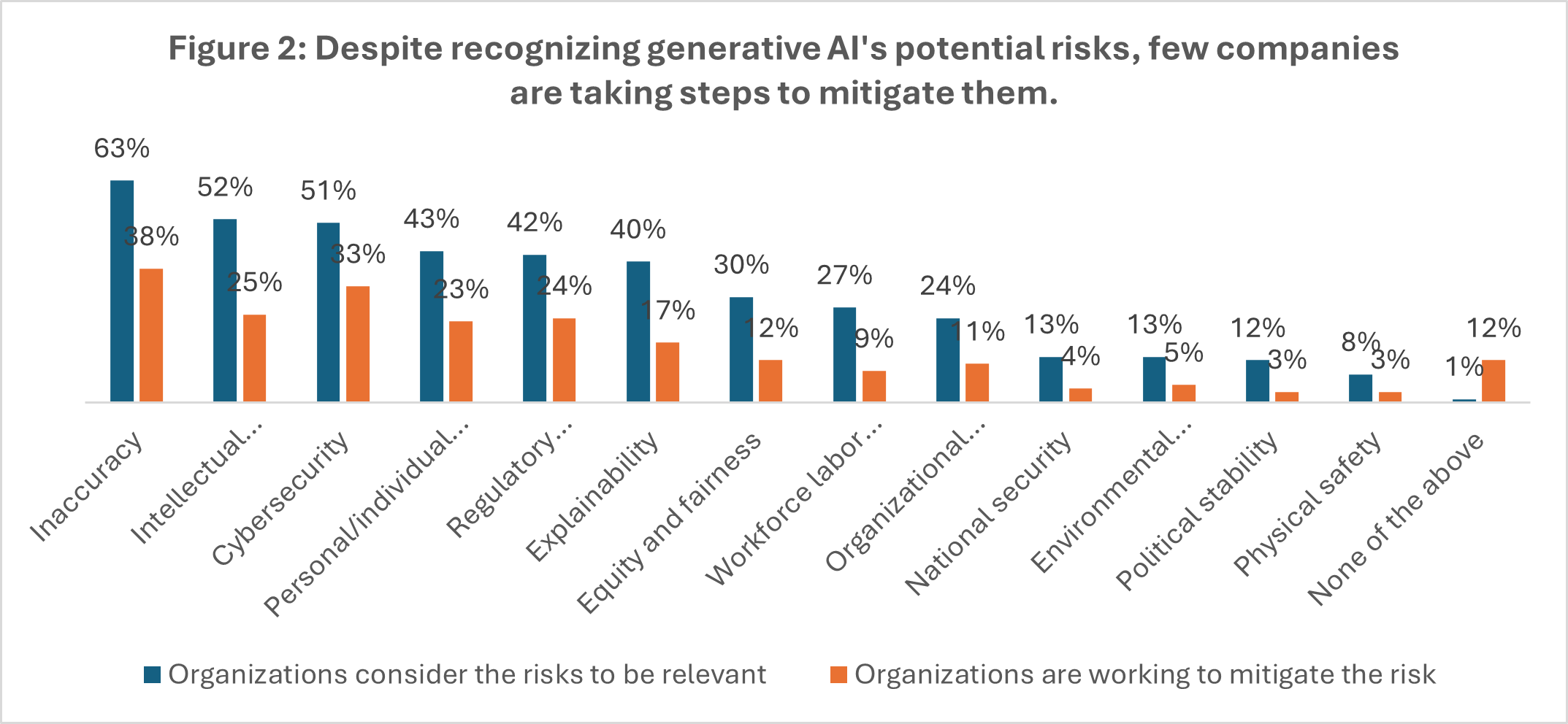

Large numbers of respondents to McKinsey’s survey, for example, recognize the inherent risks they face in using generative AI — chiefly, inaccuracy, intellectual property infringement, and cybersecurity exposures. But far fewer organizations say they are actively working to mitigate those risks (see Figure 2).

Source: McKinsey & Company

Given AI’s ubiquity today, creating a dedicated leadership role, whether at the C-suite level or just below, can be a good first step toward centrally coordinating an organization’s AI efforts, including its risk management practices. But it’s far from the only step organizations can and should be taking.

Looking deeper

Companies that are just getting started with AI should begin by considering some basic questions. For example: What security risks are associated with the AI tools we are considering? What are our regulatory obligations?

But companies that have begun to use AI — especially those that have fully embraced AI — must look deeper, with a focus on corporate governance and enterprise-wide risk management.

Companies exploring broad uses of generative AI, for instance, should ask next-level questions such as:

What data is required to train generative AI tools?

How is that data used and protected?

Is it commingled with customer data, or kept separate?

Who is responsible for monitoring AI tools’ outputs and how they are used?

Is it someone within the organization? Or is it a vendor?

While heads of AI or those with equivalent titles (if companies have created such roles) can play a leading role in answering these questions — and, more broadly, ensuring effective governance over AI-related projects — collaboration with other functions is crucial. Human resources, information security, information technology, and legal departments, among others, should also be involved. If present, risk managers and/or enterprise risk management teams should play central roles as well.

Organizations must also recognize that just as AI is still in its relatively early days, so are AI legislation and regulation. Organizations should monitor the legal and regulatory landscape as it evolves in the months and years ahead, and ensure they understand how their compliance obligations change along with it.

Reviewing insurance coverage

Because generative AI can give rise to a variety of risks, including claims that users infringed on others’ intellectual property, discriminated against employees and customers, or violated individuals’ privacy rights, several forms of insurance could respond to AI-related losses. These include cyber, technology errors and omissions (tech E&O), general liability, media liability, and employment practices liability, among other coverages.

Knowing that AI is here to stay and yet still in its relative youth, insurers are carefully monitoring its development and its potential effects on insured risks. To date, however, carriers have not excluded coverage for AI-related risks from these traditional forms of insurance.

Some stand-alone products designed specifically for AI are emerging, but they are still in their initial stages. For now, at least, existing coverage should generally respond to various AI-driven losses, as most policies either are silent on AI or provide affirmative coverage for it.

Companies making use of AI should nevertheless review their existing insurance programs. This is important because adopting new technologies, or making other changes to an insured’s operations, could create risks that were not contemplated when these policies were originally purchased.

Still, it’s important to note that insurers’ attitudes may change over time, especially as potential claims develop. Insurers are already asking questions about AI during renewals, so they can better understand the risks they are potentially taking on.

Organizations using AI should consult with their insurance brokers to evaluate stand-alone AI insurance options and stay ahead of market sentiment regarding AI. AI users should also work with their brokers to optimize language in traditional policies to ensure they have the right protection in place as they seek to capitalize on AI’s promise.